What's Dall-E and why are people talking about it?

There has been a lot of buzz on social media in recent weeks surrounding the release of Dall-E 2. So what the heck is a Dall-E, for those of you who have not yet come across the word? Dall-E is an AI system that takes text as input and generates images from it. At face value, some of you may be thinking "who cares...?", and rightfully so! Why I care (along with a large portion of the internet) is that these AI-generated images can be shockingly realistic.

As the world began to see an influx of Dall-E-generated images alongside the prompts used to create them, the reaction was mixed. One side shouted about how amazing this technology is and how AI may take over humans sooner rather than later, while the other side insisted it was not impressive at all, just a showcase of techniques we've known about for a while. So where do I stand? I'm not sure I can give an answer at this moment, but instead of exploring these questions on my own, I chose to collaborate with fellow humans on them. It all began when Eros was given early research access to Dall-E's platform.

How does Dall-E work? AI image generation 101

Before we get into Dall-E's platform and the results of our early access, let's go through a very high-level explanation of the technology. For a more in-depth technical discussion of the AI, please check out this article. Let's start off with how Dall-E works:

1) Input a text description of the image to be generated

2) Dall-E runs its model

3) 6 images are output

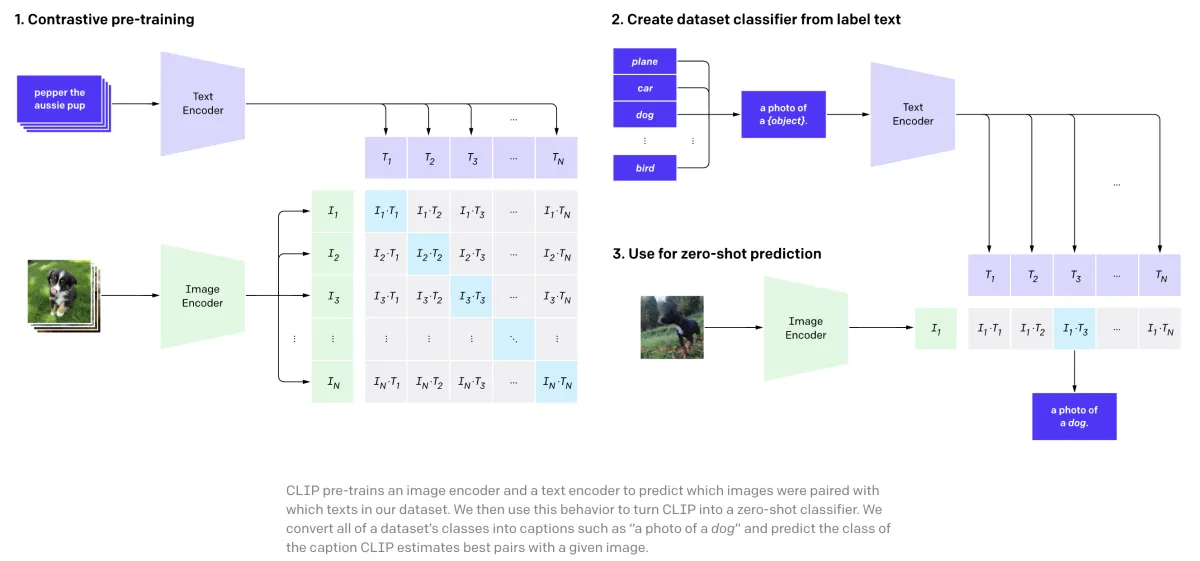

Pretty simple, yeah? The first big question in my head was: how does Mr. Robot relate words to images if everything in a computer is 0s and 1s? The answer, like many AI solutions in recent news, is a neural network! A neural network is a mathematical model built from individual nodes, or neurons, connected by weights that set the relative strength of each connection. As the name implies, this model is loosely inspired by the neurons in the human brain. I'll be discussing neural networks in future blog posts, but for now, believe me when I say that OpenAI created a very strong neural network that maps words to images extremely effectively. Below is an image from OpenAI showing how the neural network learned by analyzing millions of images, each paired with a text caption:

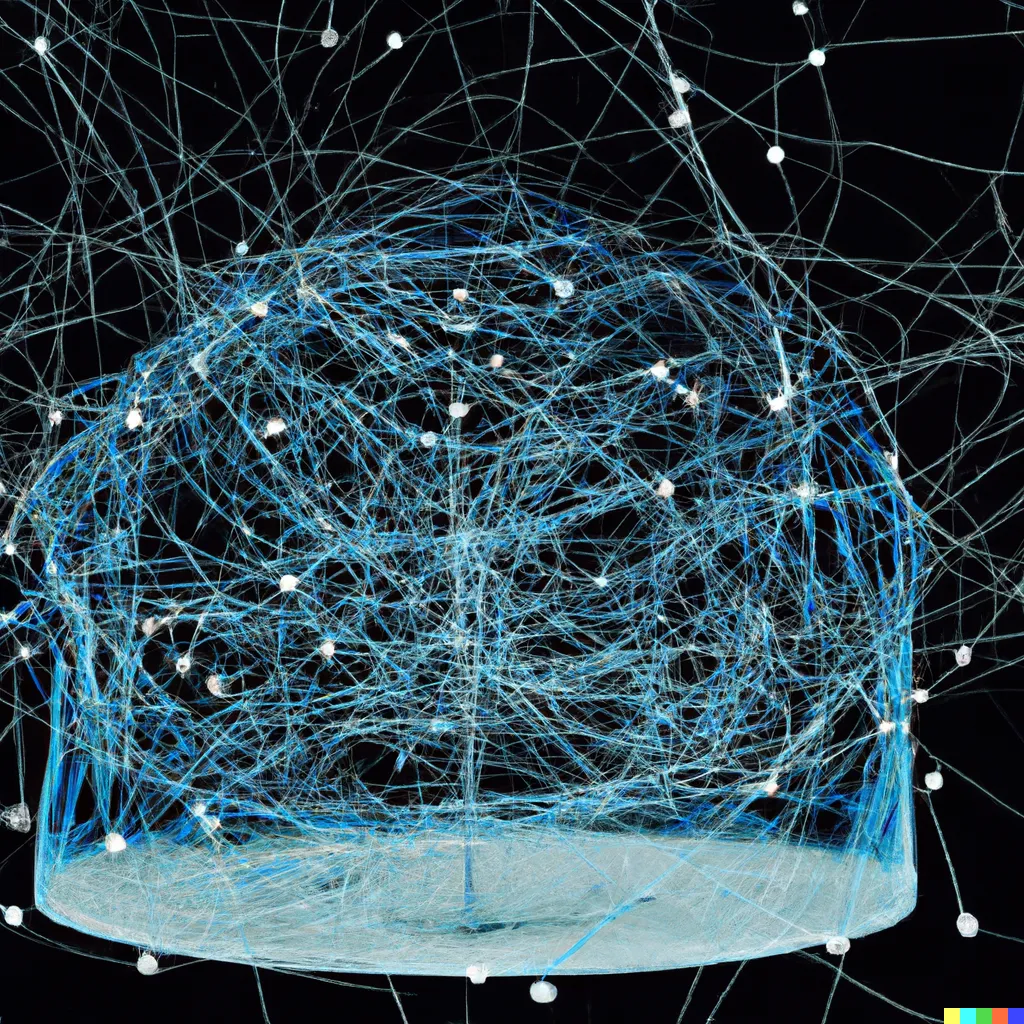

There's a lot in the above image, far more than the average reader will want to understand. For those of you who agree with that last sentence, here's a summary: a lot of images plus text tags were fed into a robot. The text accompanying each image was written by a human, so the neural network is simply learning the connections between certain words and images that humans provided. The robot breaks the words down into categories and the images into layers of red, green, and blue pixels. By doing this for millions of images, it maps the relationships between the word categories and the pixels to create something that looks like the image below:
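To make the "layers of red, green, and blue pixels" idea a little more concrete, here is a minimal Python sketch using a made-up 2x2 image. The pixel values and the `split_channels` helper are hypothetical, purely for illustration. The sketch pulls each color into its own layer and then flattens everything into one list of numbers scaled between 0 and 1, which is roughly the kind of input a neural network works with. Real models use far larger images and their own internal layouts, so treat this as a toy rather than how Dall-E actually stores images.

```python
# A tiny, made-up 2x2 "image": each pixel is a (red, green, blue) triple, 0-255.
image = [
    [(255, 0, 0), (0, 255, 0)],        # top row: a red pixel, a green pixel
    [(0, 0, 255), (255, 255, 255)],    # bottom row: a blue pixel, a white pixel
]


def split_channels(img):
    """Hypothetical helper: return three 2-D grids, one layer each for R, G, B."""
    return [
        [[pixel[c] for pixel in row] for row in img]
        for c in range(3)  # c = 0 (red), 1 (green), 2 (blue)
    ]


red, green, blue = split_channels(image)

# Flatten every channel value into a single list scaled to the range 0-1.
flat = [value / 255 for layer in (red, green, blue) for row in layer for value in row]

print(red)        # [[255, 0], [0, 255]]
print(len(flat))  # 12 numbers: 2 x 2 pixels x 3 colors
```

Even this toy image turns into 12 numbers; a 1024x1024 color image becomes over three million, which hints at why these models need so much data and compute.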

Still with me? Almost done with all the technical gunk. Now that we've created Dall-E's neural network, how does it generate images? Through processes called encoding and decoding: encoding turns the input text into an internal numerical representation, and decoding turns that representation into an output image. The original Dall-E used a neural network with 12 billion parameters (the learned weights between nodes), trained on 250 million image-text pairs, to facilitate image generation. For reference, the human brain has roughly 86 billion neurons and on the order of 100 trillion synapses (connections between neurons), shaped by billions of years of life here on planet Earth. Immediately, you can sense where I stand on the "intelligence argument" of Dall-E.
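For those who want a peek under the hood, here's a toy Python sketch of what "nodes and weights" and a parameter count mean. The `neuron` and `dense_layer_params` helpers, the weight values, and the 1024-unit layer size are all hypothetical, chosen only for illustration; Dall-E's real architecture is far more complex. A single neuron takes a weighted sum of its inputs plus a bias and squashes it through a sigmoid, and a fully connected layer's parameter count is one weight per connection plus one bias per neuron. The last line compares 12 billion parameters against 100 trillion synapses, treating a parameter as loosely analogous to a synapse, which is a rough analogy at best.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus a bias, then a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))  # squashes the result into the range (0, 1)


def dense_layer_params(n_inputs, n_outputs):
    """Parameters in a fully connected layer: one weight per connection + one bias per neuron."""
    return n_inputs * n_outputs + n_outputs


# Illustrative weights only; a trained network learns these values from data.
# Weighted sum: 0.5 * 0.8 + 1.0 * -0.4 + 0.0 = 0.0, and sigmoid(0.0) = 0.5.
out = neuron(inputs=[0.5, 1.0], weights=[0.8, -0.4], bias=0.0)
print(out)  # 0.5

# A single hypothetical layer of 1024 inputs and 1024 outputs.
layer_params = dense_layer_params(1024, 1024)
print(layer_params)  # 1049600

# Rough scale comparison using the figures quoted above.
dalle_params = 12e9        # 12 billion parameters
brain_synapses = 100e12    # ~100 trillion synapses
ratio = round(brain_synapses / dalle_params)
print(ratio)  # 8333
```

Even one modest layer holds over a million parameters, which shows how quickly a model grows to billions, and yet the brain's synapse count is still thousands of times larger.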

With that off my shoulders (I was holding that jab in), Dall-E is still an impressive showcase of what large neural network models can do on a specific task. Training Dall-E took many months, a load of energy, and millions of dollars. Needless to say, this is neither a sustainable nor an accessible way to do AI. It is a demonstration of the compute power made possible by recent advancements in deep learning, which were themselves the result of a technological spillover from GPUs (source). I do not think people should be worried about AI replacing humans anytime soon. Until a large paradigm shift occurs in the mathematics of AI, or quantum computing becomes accessible, these neural networks are input-output machines.

I wasn't here for tech talk. Where's the AI art, buddy?

Enough technical talk; let's get into the AI-generated art. The first thing people should know about Dall-E is how to provide the right input. This turned out to be the hardest skill to learn while using Dall-E: how do you really communicate with the AI? In real life, words are interpreted differently from person to person, and Dall-E is no exception. Answering this simple question took weeks, but over time I developed a sense for what to ask Dall-E. As the artist, I got better at using my artistic tool: AI.

The results of this experiment were quite surprising. Coming into this, I had limited to no artistic experience or practice. After learning how to properly communicate with Dall-E, I was able to generate images that roughly matched the idea my brain was trying to create.

The experiment started in Seattle, WA, during a glassblowing class. I had wanted to explore the creative side of myself for years and thought this class would be a good opportunity to try out AI-assisted creativity. The class started with an instructor showing us various pieces of glass we could use. After looking at the available pieces, I decided to create a panda.

I had no idea where to begin. I can't draw a panda with a pencil, so how was I supposed to create a glass panda? I couldn't even begin to imagine what it could look like... that's where Dall-E came in. I opened up the Dall-E app and entered "glass panda mural". Voilà... this was exactly what my heart desired but my brain could not come up with:

Dall-E was actually able to generate an image that I thought captured my vision quite well. After seeing the AI-generated panda, I got to work bringing the idea into the physical world. Below is the final result:

I would call this a successful experiment. Dall-E provided me (the human) with enough of a base to actually create something outside the box. I will state, however, that I still firmly believe these AI models will not replace humans anytime soon. They should be seen as a tool, like an artist's paintbrush: an aid that enables a human to enhance their creativity.

I hope you all were able to learn a bit more about generative AI, Dall-E, and neural networks through this blog post. Please reach out with any questions, feedback, or concerns at mandeep@erosconsulting.net

mandeep@erosconsulting.net

(510)996-2400

Copyright Eros Consulting -- All Rights Reserved

To be a bridge between the world of ideas and the reality of life, one solution at a time.